vRealize Automation

Written by Sam McGeown

on 20/7/2017

Published under VMware and vRealize Automation

As a consultant I’ve had the opportunity to design, install and configure dozens of production vRealize Automation deployments, from reasonably small Proof of Concept environments to globally-scaled, multi-datacenter, fully distributed behemoths. It’s fair to say that I’ve made mistakes along the way, and learned a lot about what makes a deployment a success.

In the end, pretty much everything comes down to getting the prerequisites right. Nothing I’ve written here isn’t already covered in the official documentation, and the installation wizard does a huge amount of the work for you.

Written by Sam McGeown

on 6/4/2017

I already have a vRealize Orchestrator workflow to shut down my workload cluster. What I want to do is trigger it with a voice command from Alexa.

Now, the correct and proper thing to do here would be to create a new Alexa skill, write the function in Lambda, and have it call my Orchestrator REST API to execute the workflow. That way I could control the “intents” and “utterances” and get verbal feedback.
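A minimal sketch of that “proper” approach, written as a Python Lambda handler. The request and response shapes follow the Alexa Skills Kit JSON format, and the URL follows the vRO REST API's workflow-executions resource; the host, port, workflow ID, credentials and the `ShutdownClusterIntent` intent name are all hypothetical placeholders. The `send` parameter exists only so the request can be inspected without a live vRO.

```python
import base64
import json
import urllib.request

# Hypothetical placeholders: substitute your own vRO host, workflow ID and credentials.
VRO_BASE_URL = "https://vro.lab.local:8281/vco/api"
WORKFLOW_ID = "00000000-0000-0000-0000-000000000000"
VRO_USER = "alexa-svc"
VRO_PASSWORD = "changeme"


def build_execution_request(base_url, workflow_id, user, password):
    """Build (but do not send) a POST that starts a vRO workflow execution."""
    url = f"{base_url}/workflows/{workflow_id}/executions"
    # Assumes the shutdown workflow takes no inputs, so the parameter list is empty.
    body = json.dumps({"parameters": []}).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


def speech(text):
    """Minimal Alexa skill response carrying plain-text speech."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


def lambda_handler(event, context, send=urllib.request.urlopen):
    """Lambda entry point: start the vRO workflow when the (hypothetical) intent fires."""
    request = event.get("request", {})
    intent_name = request.get("intent", {}).get("name")
    if request.get("type") == "IntentRequest" and intent_name == "ShutdownClusterIntent":
        send(build_execution_request(VRO_BASE_URL, WORKFLOW_ID, VRO_USER, VRO_PASSWORD))
        return speech("Shutting down the workload cluster.")
    return speech("Sorry, I didn't understand that.")
```

In practice the Lambda function also needs a network path to Orchestrator and must trust its certificate; neither is shown here, and the credentials belong in a secrets store rather than in code.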

Written by Sam McGeown

on 19/12/2016

One of the cool new features released with vRealize Automation 7.2 was the integration of VMware Admiral (container management) into the product. VMware also recently made version 1 of vSphere Integrated Containers (VIC) generally available (GA), so I thought it was time I started playing around with the two.

In this article I’m going to cover deploying VIC to my vSphere environment and then adding the resulting container host to vRA 7.2 container management.

Written by Sam McGeown

on 18/11/2016

Published under VMware and vRealize Automation

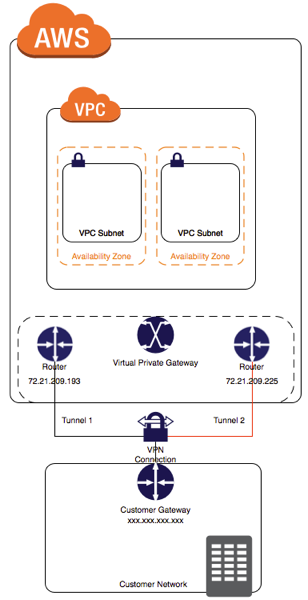

Recently I’ve been working on some ideas in my lab to leverage the AWS endpoint on vRealize Automation. One of the things I needed was to get Software Components working on my AWS-deployed instances.

The diagram shows my end-state network: the instance deployed by vRA into AWS should sit in a private subnet in my VPC, use my local lab DNS server, and be able to reach my vRA instance.

Written by Sam McGeown

on 7/11/2016

Published under VMware and vRealize Automation

When you’re working with Amazon and vRealize Automation Software Components, one of the requirements is that the Guest Agent (gugent) can talk back to the vRealize Automation APIs: the gugent polls the API for tasks it should perform, downloads and executes them, then updates each task with its status.

This means that virtual machines deployed as EC2 instances in an AWS VPC need to be able to reach back into internal corporate networks, which is not something you’d want to publish on the internet!
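To make the direction of traffic concrete, here is a minimal sketch of the poll, download, execute, report cycle described above. The `FakeVraTaskApi` class, its method names and the status strings are hypothetical stand-ins, not the real vRA API; the point is that every call is initiated by the agent, which is why the EC2 instance needs an outbound path to vRA.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    task_id: str
    work: Callable[[], None]  # stands in for the script the gugent downloads
    status: str = "Pending"


class FakeVraTaskApi:
    """Hypothetical in-memory stand-in for the vRA endpoints the gugent talks to."""

    def __init__(self, tasks: List[Task]):
        self.tasks: Dict[str, Task] = {t.task_id: t for t in tasks}

    def poll(self, agent_id: str) -> List[Task]:
        # A real API would filter by agent/machine; the fake returns all pending work.
        return [t for t in self.tasks.values() if t.status == "Pending"]

    def download(self, task_id: str) -> Callable[[], None]:
        return self.tasks[task_id].work

    def update_status(self, task_id: str, status: str) -> None:
        self.tasks[task_id].status = status


def poll_once(api: FakeVraTaskApi, agent_id: str) -> None:
    """One agent cycle: poll for tasks, download each, execute it, report its status."""
    for task in api.poll(agent_id):
        work = api.download(task.task_id)
        try:
            work()
        except Exception:
            api.update_status(task.task_id, "Failed")
        else:
            api.update_status(task.task_id, "Completed")
```

A real agent would call something like `poll_once` in a loop with a sleep between cycles; no inbound connection to the instance is ever needed.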

Written by Sam McGeown

on 28/7/2016

Published under VMware and vRealize Automation

Although it’s fairly limited, you can add AWS as an endpoint for vRealize Automation 7 and consume EC2 AMIs as part of a blueprint. You can even add the deployed instances to an existing Elastic Load Balancer at deploy time. In this post I’ll run through the basics to get up and running and deploy your first highly available (multiple Availability Zone, load balanced) blueprint.

Preparing AWS for use as a vRA endpoint

There are some obvious prerequisites for attaching an AWS endpoint; for example, you need to have a VPC configured.
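As a quick pre-flight check, the sketch below verifies that a given VPC exists and has subnets in at least two Availability Zones, which a multi-AZ, load-balanced blueprint needs. It expects a boto3-style EC2 client (`describe_vpcs` and `describe_subnets` with `Filters` are real boto3 EC2 client calls); the function name, the two-AZ threshold and the message wording are assumptions, and pagination is ignored for brevity.

```python
from typing import List


def check_vpc_prereqs(ec2_client, vpc_id: str, min_azs: int = 2) -> List[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    vpc_filter = [{"Name": "vpc-id", "Values": [vpc_id]}]

    # Filtering (rather than passing VpcIds) returns an empty list for a missing VPC
    # instead of raising an error.
    if not ec2_client.describe_vpcs(Filters=vpc_filter)["Vpcs"]:
        return [f"VPC {vpc_id} not found"]

    problems = []
    subnets = ec2_client.describe_subnets(Filters=vpc_filter)["Subnets"]
    azs = {subnet["AvailabilityZone"] for subnet in subnets}
    if len(azs) < min_azs:
        problems.append(
            f"VPC {vpc_id} has subnets in {len(azs)} Availability Zone(s); "
            f"a multi-AZ blueprint needs at least {min_azs}"
        )
    return problems
```

With boto3 installed this could be called as `check_vpc_prereqs(boto3.client("ec2", region_name="eu-west-1"), "vpc-…")`, where the region and VPC ID are your own.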

Written by Sam McGeown

on 19/4/2016

Big thanks to Jose Luis Gomez for this solution; his response to my tweet was spot on and invaluable!

I’ve been trying to configure vCloud Air as a vCloud Director host in vRealize Orchestrator in order to create some custom resource actions for Day 2 operations in vRealize Automation. I found that there’s *very* little information out there on how to do this, so I ended up writing my own custom resource mapping from the virtual machines to VCAC:VirtualMachine objects; at least that way I could add my resource action.

Written by Sam McGeown

on 30/3/2016

… a properties object.

I wanted to create a workflow that I could enable to log all of the keys, values and types in the properties object at each stage of the vRA7 MachineProvisioning workflows, giving me a reference for the payload at each stage.

To do this I created a new workflow, “debugProperties”, and added an input parameter called “payload” of type Properties.
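vRO workflows are scripted in JavaScript against vRO's own Properties type, so the following is only a Python analogue of what a “debugProperties” workflow does, not vRO code: walk a properties-like mapping, emit one line per key with its value and type, and descend into nested property sets. The function names and the dotted-path output format are assumptions for illustration.

```python
import logging
from collections.abc import Mapping
from typing import Any, List

log = logging.getLogger("debugProperties")


def describe_properties(payload: Mapping, prefix: str = "") -> List[str]:
    """Return one 'key = value (type)' line per entry, recursing into nested mappings."""
    lines: List[str] = []
    for key in sorted(payload):
        value: Any = payload[key]
        path = f"{prefix}{key}"
        if isinstance(value, Mapping):
            # Nested property sets are listed, then expanded with a dotted prefix.
            lines.append(f"{path} (Properties)")
            lines.extend(describe_properties(value, prefix=path + "."))
        else:
            lines.append(f"{path} = {value!r} ({type(value).__name__})")
    return lines


def debug_properties(payload: Mapping) -> List[str]:
    """Log every line from describe_properties and return them for inspection."""
    lines = describe_properties(payload)
    for line in lines:
        log.info(line)
    return lines
```

Keys are sorted so that dumps from different lifecycle stages line up and are easy to diff.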

Written by Sam McGeown

on 16/3/2016

… VMware KB2140539, where requesting an XaaS (vRealize Orchestrator) blueprint fails with:

Failed to retrieve form from provider

The KB describes it occurring when “more than one VMware vRealize Orchestrator instance is configured for different tenants”. The issue I faced was not the same: in my case, the system default tenant was configured to use the embedded vRO, and the customer tenant was configured to use the system default (which would be the embedded vRO!).

Written by Sam McGeown

on 9/3/2016

The new Event Broker service in vRA7 is one of the most exciting features of this latest release; the possibilities for extensibility are huge. At this point in time you can still use the old method of workflow stubs to customise machine lifecycle events, but at some point in the future this will be deprecated and the Event Broker will be the only way to extend vRA.

With this in mind, I wanted to use the Event Broker for something I’m asked about on almost every customer engagement: custom hostnames beyond what the Machine Prefixes mechanism can do.