vRealize Automation

Written by Simon Eady

on 18/3/2021

Published under vRealize Automation

Integrating vROps with vRA 8 using Workspace One Auth Source

Product Version - vRealize Automation 8.x

Why integrate vROps with vRA 8?

vRealize Automation can work with vRealize Operations Manager to perform advanced workload placement, provide deployment health and virtual machine metrics, and display pricing.

So what is the problem?

When configuring the integration, you enter the vROps URL and are also asked for the username and password of the service account you wish to use.

Written by Simon Eady

on 21/2/2021

Published under vRealize Automation

Why use Content Libraries with vRA 8

Product Version - vRealize Automation 8.x

What are vSphere Content Libraries?

A content library stores and manages content in the form of library items. A single library item can consist of one file or multiple files. For example, the OVF template is a set of files (.ovf, .vmdk, and .mf). When you upload an OVF template to the library, you upload the entire set of files, but the result is a single library item of the OVF Template type.

Written by Simon Eady

on 26/1/2019

Published under Career

It has been a while since I have had time to write a blog post; the last quarter of last year was pretty crazy from a work point of view.

Regardless, it is now a New Year and my tech focus is turning very much to CMP-related things, particularly vRealize Automation. (I am also very much looking forward to learning more about VMware’s CaS, which I saw demo’d at the UK VMUG late last year by Grant Orchard.)

Written by Sam McGeown

on 18/4/2018

Published under VMware

When vRealize Lifecycle Manager 1.2 was released recently, I was keen to get it installed in my lab, since I maintain several vRealize Automation deployments for development and testing, as well as performing upgrades. With vRLCM I can reduce the administrative overhead of managing the environments, as well as easily migrate content between environments (I’ll be blogging on some of these cool new features soon).

However, I hit a snag when I began to import my existing environment - I couldn’t get the vCenter data collection to run.

Written by Sam McGeown

on 26/3/2018

Written by Sam McGeown

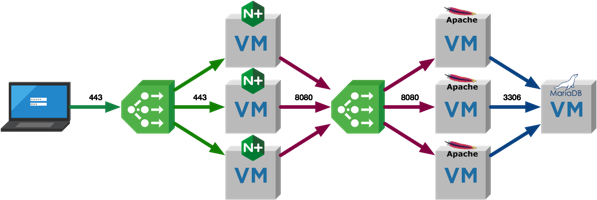

on 26/3/2018 One question I’m asked quite a lot is what I use for a 3-tier application when I’m testing things like NSX micro-segmentation with vRealize Automation. The simple answer is that I used to make something up as I went along, deploying components by hand and generally repeating myself a lot. I had some cut/paste commands in my note application that sped things up a little, but nothing that developed. I’ve been meaning to rectify this for a while, and this is the result!

Written by Sam McGeown

on 19/1/2018

Written by Sam McGeown

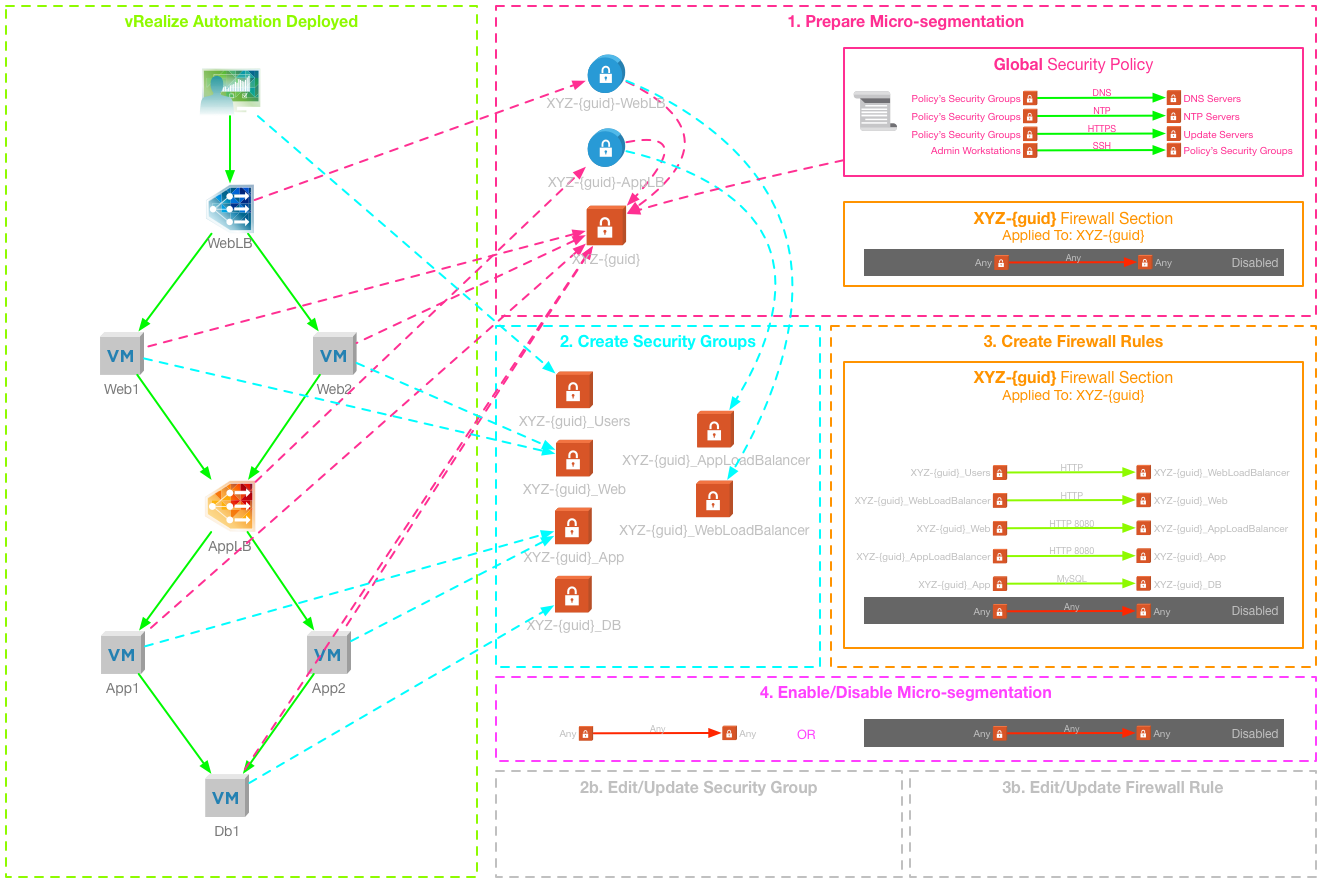

on 19/1/2018 vRealize Automation and NSX integration has introduced the ability to deploy multi-tiered applications with network services included. The current integration also enables a method to deploy micro-segmentation out of the box, based on dynamic Security Group membership and the Service Composer. This method does have some limitations, and can be inflexible for the on-going management of deployed applications. It requires in-depth knowledge and understanding of NSX and the Distributed Firewall, as well as access to the Networking and Security manager that is hosted by vCenter Server.

Written by Simon Eady

on 14/9/2017

Published under Community

This was, as I expected, the busiest day session-wise for me. There was so much good stuff that I had to be a little ruthless about what I wanted and/or needed to attend, while also wanting to get into the Solutions Exchange.

Also, the customer appreciation party was scheduled for the end of the day, so that was something I was looking forward to a great deal (Hint - Kaiser Chiefs).

Written by Sam McGeown

on 20/7/2017

Published under VMware and vRealize Automation

As a consultant I’ve had the opportunity to design, install and configure dozens of production vRealize Automation deployments, from reasonably small Proof of Concept environments to globally-scaled multi-datacenter fully distributed behemoths. It’s fair to say that I’ve made mistakes along the way - and learned a lot of lessons as to what makes a deployment a success.

In the end, pretty much everything comes down to getting the pre-requisites right. Everything that I’ve written here is already covered in the official documentation, and the installation wizard does a huge amount of the work for you.

Written by Sam McGeown

on 14/3/2017

Published under VMware

In this humble consultant’s opinion, Log Insight is one of the most useful tools in the administrator’s tool belt for troubleshooting vRealize Automation. I have lost count of the number of times I’ve been asked to help troubleshoot an issue where, when asked, people don’t know which log they should be looking at. The simple fact is that vRealize Automation has a lot of log files. Correlating these log sources to provide an overall picture is a painful, manual process - unless you have Log Insight!

Written by Sam McGeown

on 19/12/2016

One of the cool new features released with vRealize Automation 7.2 was the integration of VMware Admiral (container management) into the product, and recently VMware made version 1 of vSphere Integrated Containers generally available (GA), so I thought it was time I started playing around with the two.

In this article I’m going to cover deploying VIC to my vSphere environment and then adding that host to the vRA 7.2 container management.