Lab Guide - Kubernetes Load Balancer and Ingress with MetalLB and Contour

I run quite a few applications in Docker as part of my home network - there’s a small selection below, but at any one time there might be 10-15 more apps I’m playing around with:

- plex - Streaming media server

- unifi - Ubiquiti Network Controller

- homebridge - Apple HomeKit-compatible smart home integration

- influxdb - Open source time series database

- grafana - Data visualization & Monitoring

- pihole - Internet tracking and ad blocker

- vault - HashiCorp secrets management

Until recently a single Photon OS VM with Docker was all I needed to run - everything shared the same host IP, stored its configuration locally or on an NFS mount, and generally ran fine. However, my wife and kids have become more dependent on plex and homebridge (which I use to control the air conditioning in my house), and if they’re down, it’s a problem. So, I embarked on a little project to provide some better availability, and learn a little in the process.

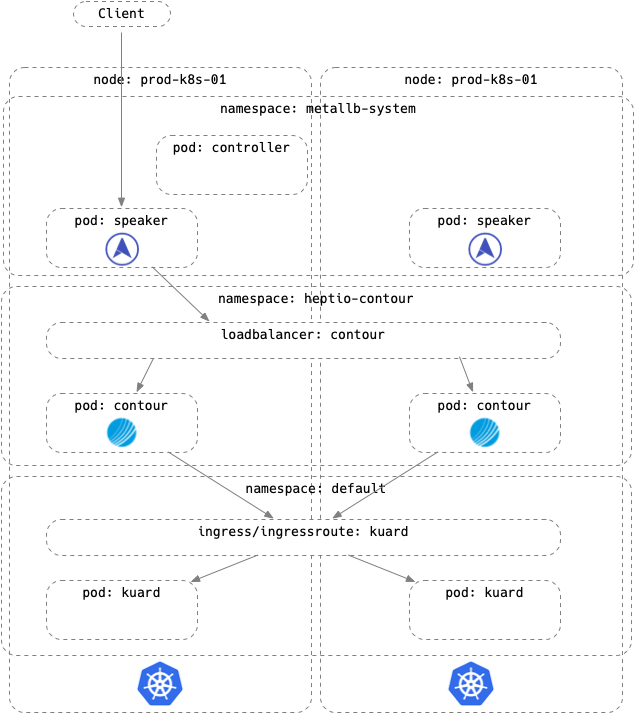

I’ve deployed a 2-node Kubernetes cluster based on two Ubuntu 18.04 VMs, and configured Flannel as my CNI. I’m going to assume you can do this by yourself; if you can’t, this might not be the place to start! On top of this I want to deploy two add-ons - MetalLB and Contour. MetalLB will provide load balancing, and Contour will provide an Ingress controller to steer traffic based on policy.

Install and Configure MetalLB

What is MetalLB?

MetalLB hooks into your Kubernetes cluster, and provides a network load-balancer implementation. In short, it allows you to create Kubernetes services of type “LoadBalancer” in clusters that don’t run on a cloud provider, and thus cannot simply hook into paid products to provide load-balancers.
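As a rough illustration of what that means in practice, a Service of type LoadBalancer might look like the sketch below. The name, label, and ports (`my-app`, 80, 8080) are hypothetical placeholders, not something from my cluster.

```yaml
# Hypothetical example: the name, label, and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: LoadBalancer   # on a bare-metal cluster, MetalLB assigns the external IP
  selector:
    app: my-app        # pods carrying this label receive the traffic
  ports:
  - port: 80           # port exposed on the external IP
    targetPort: 8080   # port the pods listen on
```

Without something like MetalLB in the cluster, a Service like this would sit with its external IP stuck in a pending state.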

Installing MetalLB

Installing MetalLB is really, really easy - I deployed it as a Kubernetes manifest (or you can use a Helm chart). It creates a Namespace, metallb-system; a controller Deployment, metallb-system/controller; a DaemonSet, metallb-system/speaker; as well as the ClusterRoles, ClusterRoleBindings, and ServiceAccounts to support the application.


You can inspect the created objects with kubectl get commands, using -n metallb-system to select the namespace.


In my cluster, MetalLB has deployed a single controller and two speakers (one per node). Installed but unconfigured, it will sit there doing nothing, so let’s configure it!

Configuring MetalLB in Layer 2 mode

MetalLB can run in two modes: Layer 2, or Layer 3 (BGP). Put simply, in Layer 2 mode MetalLB takes a pool of IP addresses from its configuration, and a “speaker” instance answers ARP requests for each assigned address, claiming that additional IP on its node and forwarding traffic on to the Kubernetes workloads.

In my lab, Layer 2 mode is more than enough for my needs, so I’m going to configure a pool of IPs to be consumed by Kubernetes LoadBalancer services.
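A Layer 2 pool along these lines might look like the sketch below. It assumes the ConfigMap-based configuration that older MetalLB releases use (newer releases, from 0.13 onward, configure pools through IPAddressPool and L2Advertisement resources instead). The address range is an assumption for illustration, so pick a free range on your own network that sits outside your DHCP scope.

```yaml
# Sketch of a Layer 2 address pool for ConfigMap-based MetalLB releases.
# The address range is an assumed example, not a value to copy as-is.
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.10.200-192.168.10.250
```

Once this is applied, any Service of type LoadBalancer should be handed the next free address from the pool.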


Install and Configure Contour

What is Contour?

Contour is an Ingress controller for Kubernetes that works by deploying the Envoy proxy as a reverse proxy and load balancer. Unlike other Ingress controllers, Contour supports dynamic configuration updates out of the box while maintaining a lightweight profile.

Contour also introduces a new ingress API (IngressRoute) which is implemented via a Custom Resource Definition (CRD). Its goal is to expand upon the functionality of the Ingress API to allow for a richer user experience as well as solve shortcomings in the original design.
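To give a feel for the shape of that API, here is a minimal IngressRoute sketch. The hostname, namespace, and Service name (`my-app.example.com`, `default`, `my-app`) are hypothetical, and the `contour.heptio.com/v1beta1` API group applies to the Heptio-era Contour releases that ship this CRD.

```yaml
# Minimal IngressRoute sketch; the hostname and service are hypothetical.
apiVersion: contour.heptio.com/v1beta1
kind: IngressRoute
metadata:
  name: my-app
  namespace: default
spec:
  virtualhost:
    fqdn: my-app.example.com   # requests for this host are matched
  routes:
  - match: /                   # path prefix to match
    services:
    - name: my-app             # Service in the same namespace
      port: 80
```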

A great intro and demo of Contour by @stevesloka is available here, which I highly recommend you watch to understand how Contour works.

Installing Contour

Installation is as simple as applying a Kubernetes manifest.


To quote the official docs again, in addition to the IngressRoute CRD this command creates:

- A new namespace heptio-contour with two instances of Contour in the namespace

- A Service of type: LoadBalancer that points to the Contour instances

- Depending on your configuration, new cloud resources – for example, ELBs in AWS

In this deployment the LoadBalancer will be fulfilled by MetalLB, based on the Layer 2 configuration specified above.

You can inspect the created components by using the kubectl get command again, this time against the heptio-contour namespace.


Note that the Contour LoadBalancer service has an external IP - this is the IP address that all the external traffic will go to, and Contour will then steer that traffic to the correct application based on the Ingress configuration.

Deploying a load-balanced and ingress-routed application

To test out the new load balancer and ingress functionality, we can use the example application in the Contour docs - kuard. I’ve downloaded the manifest and dropped the number of replicas to two, as I’ve only got 2 Kubernetes nodes running.

The manifest describes three Kubernetes objects: a Deployment of the kuard application with 2 replicas, a Service to expose port 80 on a ClusterIP, and an Ingress that describes how the Envoy proxy should pass traffic to the Service.
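For reference, those three objects might look roughly like the sketch below. It is modelled on the kuard example rather than copied from my file, so treat the image tag, labels, and container port as assumptions. The Ingress uses the `extensions/v1beta1` API that clusters of this vintage serve; newer clusters only serve `networking.k8s.io/v1`, which has a different spec layout.

```yaml
# Sketch of the kuard manifest: image tag and labels are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kuard
  labels:
    app: kuard
spec:
  replicas: 2                  # one per node in a 2-node cluster
  selector:
    matchLabels:
      app: kuard
  template:
    metadata:
      labels:
        app: kuard
    spec:
      containers:
      - name: kuard
        image: gcr.io/kuar-demo/kuard-amd64:1
---
apiVersion: v1
kind: Service
metadata:
  name: kuard
  labels:
    app: kuard
spec:
  type: ClusterIP              # no external IP; traffic arrives via Envoy
  selector:
    app: kuard
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080           # assumed port that kuard listens on
---
# Older Ingress API; adjust for networking.k8s.io/v1 on current clusters.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kuard
  labels:
    app: kuard
spec:
  backend:                     # default backend: send everything to kuard
    serviceName: kuard
    servicePort: 80
```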

So, when the manifest is applied, the two kuard containers are deployed and a Service is configured. The Ingress is configured to pass traffic to the Service named “kuard” on port 80. First, we deploy the manifest with kubectl apply.


And then we inspect the deployed components using kubectl get commands again.


Note that there’s no external IP for the kuard service - instead it’s accessed via the Contour-deployed Envoy proxy, which for me has an external IP of 192.168.10.201.


Now if I hit the load-balanced IP, I see the kuard application, and each time I refresh, the response switches between the two container instances.

So… this is cool, but not very useful over and above basic load balancer functionality (and MetalLB could provide this on its own). The real magic of Contour is in the IngressRoute, and the ability to route incoming traffic based on the IngressRoute object, but that’s the subject of another blog post! There are some great examples in the Contour examples folder on GitHub if you can’t wait :)